Wei Yun Yau

TIDAL: Temporally Interleaved Diffusion and Action Loop for High-Frequency VLA Control

Jan 21, 2026

Abstract: Large-scale Vision-Language-Action (VLA) models offer semantic generalization but suffer from high inference latency, limiting them to a low-frequency batch-and-execute paradigm. This frequency mismatch creates an execution blind spot, causing failures in dynamic environments where targets move during the open-loop execution window. We propose TIDAL (Temporally Interleaved Diffusion and Action Loop), a hierarchical framework that decouples semantic reasoning from high-frequency actuation. TIDAL operates as a backbone-agnostic module for diffusion-based VLAs, using a dual-frequency architecture to redistribute the computational budget. Specifically, a low-frequency macro-intent loop caches semantic embeddings, while a high-frequency micro-control loop interleaves single-step flow integration with execution. This design enables approximately 9 Hz control updates on edge hardware (vs. approximately 2.4 Hz for baselines) without increasing marginal overhead. To handle the resulting latency shift, we introduce a temporally misaligned training strategy in which the policy learns predictive compensation using stale semantic intent alongside real-time proprioception. Additionally, we address the insensitivity of static vision encoders to velocity by incorporating a differential motion predictor. TIDAL is architectural, making it orthogonal to system-level optimizations. Experiments show a 2x performance gain over open-loop baselines in dynamic interception tasks. Despite a marginal regression in static success rates, our approach yields a 4x increase in feedback frequency and extends the effective horizon of semantic embeddings beyond the native action chunk size. Under non-paused inference protocols, TIDAL remains robust where standard baselines fail due to latency.

Piecewise Linear De-skewing for LiDAR Inertial Odometry

Aug 13, 2021

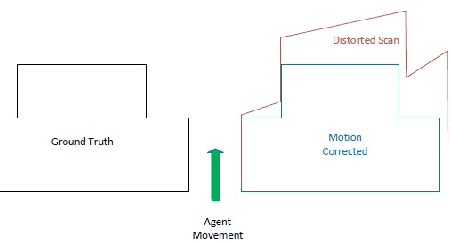

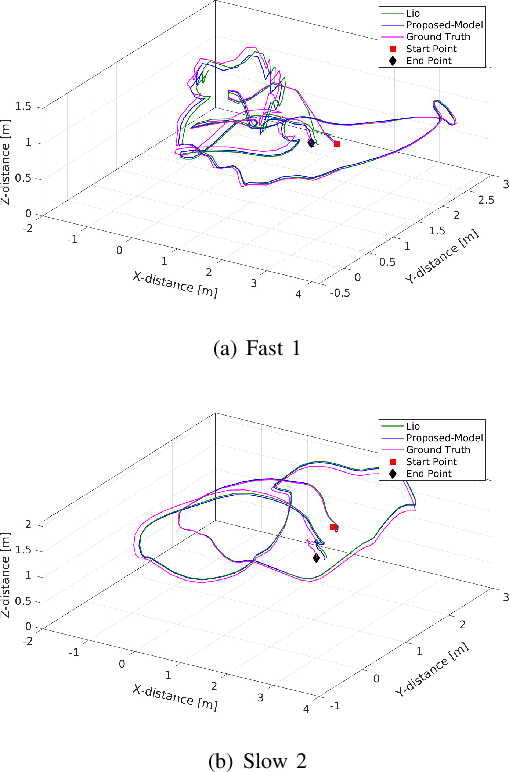

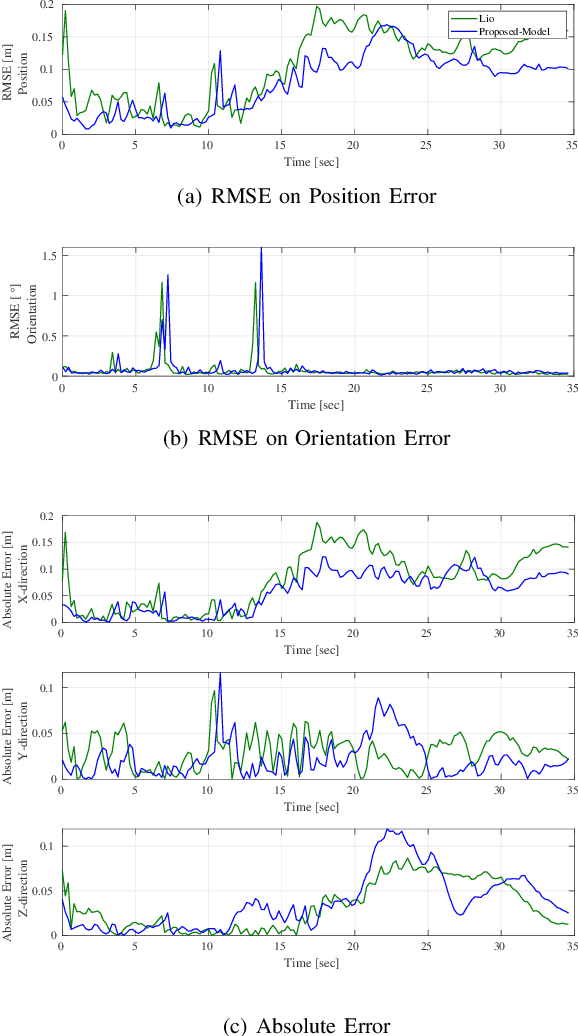

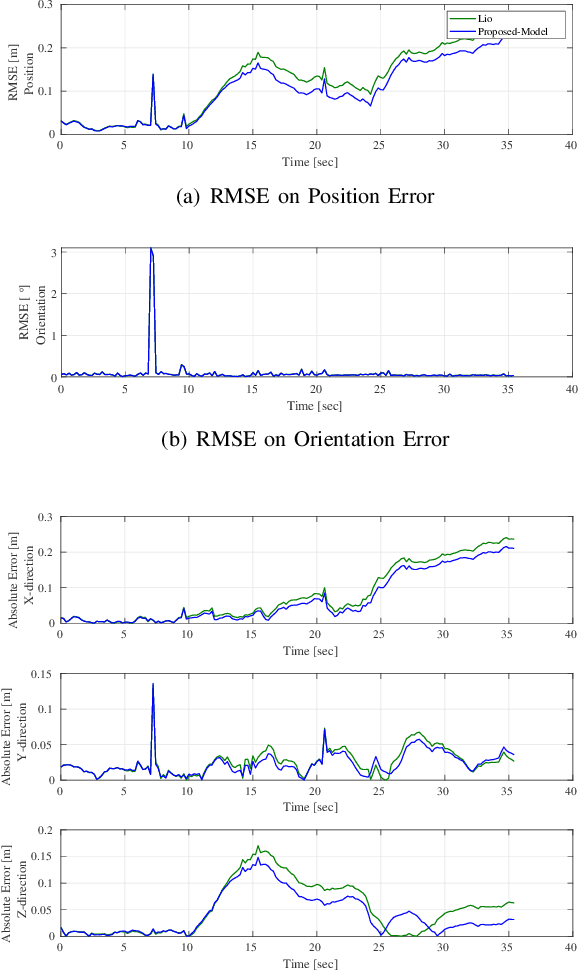

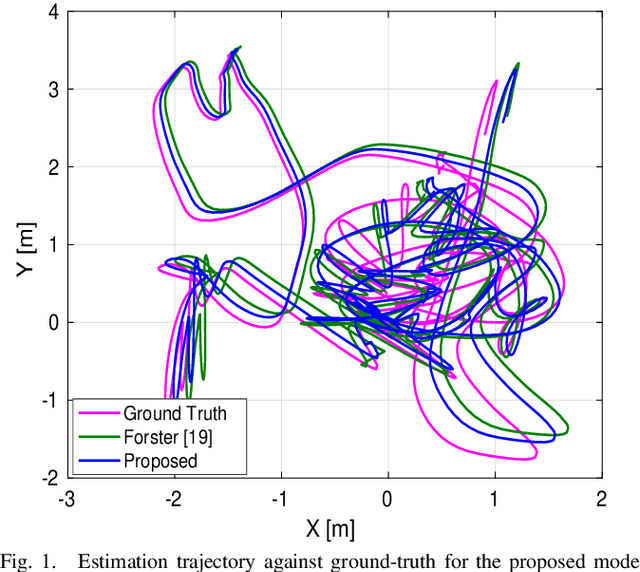

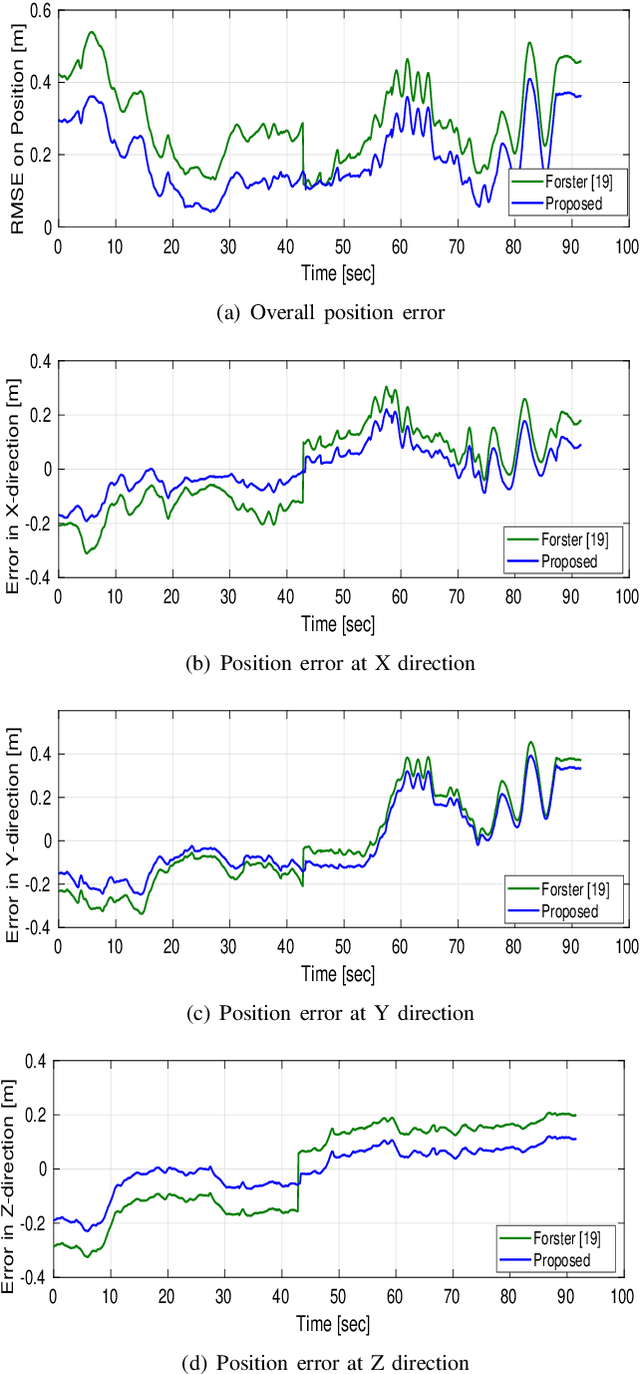

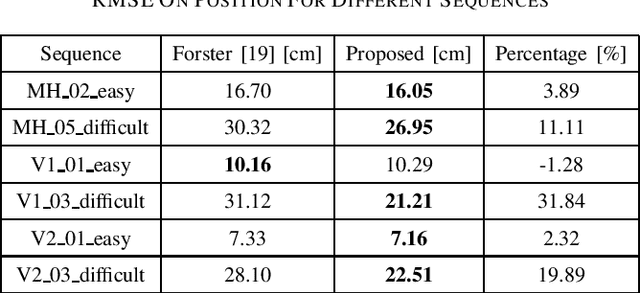

Abstract: Light detection and ranging (LiDAR) on a moving agent can suffer from motion distortion due to the simultaneous rotation of the LiDAR and fast movement of the agent. An accurate piecewise linear de-skewing algorithm is proposed to correct the motion distortions for LiDAR inertial odometry (LIO) using high-frequency motion information provided by an inertial measurement unit (IMU). Experimental results show that the proposed algorithm can be adopted to improve the performance of existing LIO algorithms, especially in cases of fast movement.
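The general idea of piecewise linear de-skewing can be illustrated with a minimal planar sketch. This is not the paper's implementation: it assumes a 2D pose `(x, y, yaw)`, per-point timestamps, and a list of poses already propagated from the IMU. The names `interpolate_pose` and `deskew` are hypothetical. Each point is re-expressed in the sensor frame at a reference time by interpolating linearly within the pose segment that brackets its timestamp, rather than across the whole scan.

```python
import bisect
import math

def interpolate_pose(poses, t):
    """Linearly interpolate (x, y, yaw) within the pose segment that brackets t.

    poses: list of (time, x, y, yaw) sorted by time (assumed to come from IMU
    propagation). Yaw wrap-around is ignored in this sketch.
    """
    times = [p[0] for p in poses]
    i = bisect.bisect_right(times, t)
    i = min(max(i, 1), len(poses) - 1)  # clamp to a valid segment index
    t0, x0, y0, yaw0 = poses[i - 1]
    t1, x1, y1, yaw1 = poses[i]
    a = (t - t0) / (t1 - t0)
    return (x0 + a * (x1 - x0), y0 + a * (y1 - y0), yaw0 + a * (yaw1 - yaw0))

def deskew(points, poses, t_ref):
    """Re-express timestamped points in the sensor frame at time t_ref.

    points: list of (time, px, py), each in the sensor frame at its capture time.
    """
    xr, yr, yawr = interpolate_pose(poses, t_ref)
    cr, sr = math.cos(yawr), math.sin(yawr)
    corrected = []
    for t, px, py in points:
        x, y, yaw = interpolate_pose(poses, t)
        c, s = math.cos(yaw), math.sin(yaw)
        # Sensor frame at capture time -> world frame.
        wx, wy = x + c * px - s * py, y + s * px + c * py
        # World frame -> sensor frame at the reference time.
        dx, dy = wx - xr, wy - yr
        corrected.append((cr * dx + sr * dy, -sr * dx + cr * dy))
    return corrected
```

With poses sampled more densely than the scan rate, each segment only has to approximate the motion over a short interval, which is the motivation for a piecewise rather than a single linear interpolation per scan.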

Toward Underground Localization: Lidar Inertial Odometry Enabled Aerial Robot Navigation

Oct 29, 2019

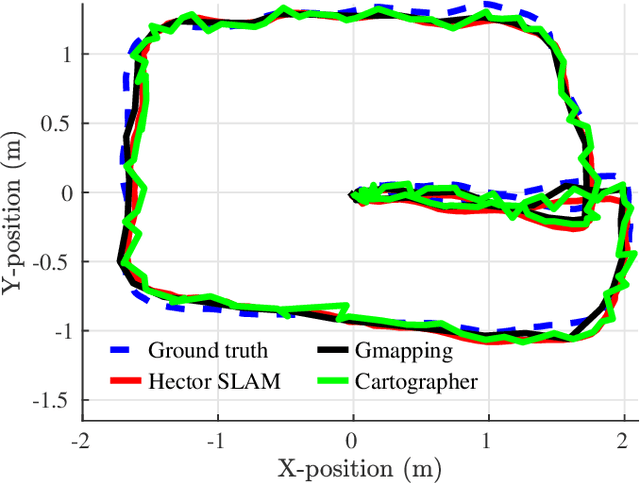

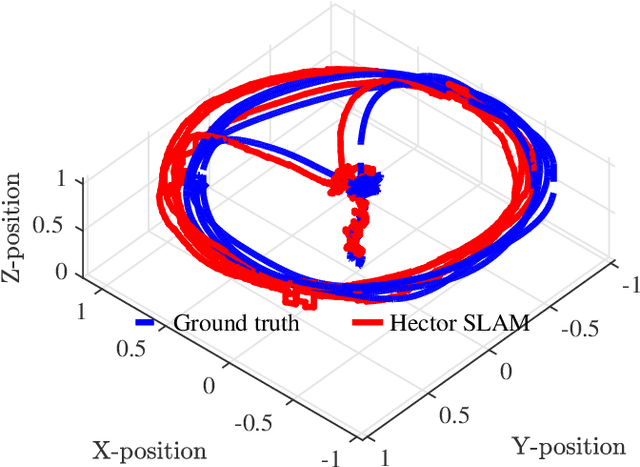

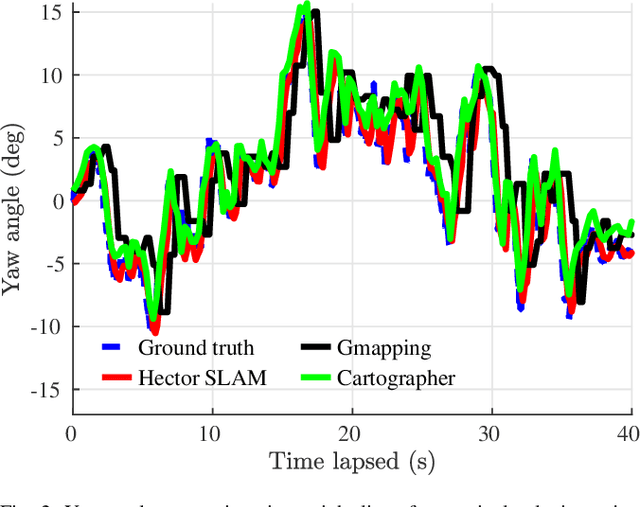

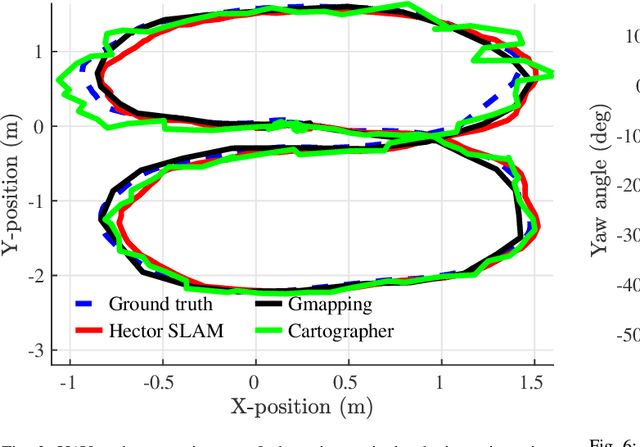

Abstract: Localization can be achieved with different sensors and techniques, such as the global positioning system (GPS), Wi-Fi, ultrasonic sensors, and cameras. In this paper, we focus on laser-based localization for unmanned aerial vehicle (UAV) applications in a GPS-denied environment such as a deep tunnel system. In addition to a low-cost 2D LiDAR for the planar axes, a single-axis LiDAR for the vertical axis and an inertial measurement unit (IMU) are used to increase the reliability and accuracy of localization. We present a comparative analysis of three selected laser-based simultaneous localization and mapping (SLAM) approaches: (i) Hector SLAM; (ii) Gmapping; and (iii) Cartographer. These algorithms have been implemented and tested through real-world experiments. The results are compared with ground truth data, and the experiments are available at https://youtu.be/kQc3mJjw_mw.

Accurate IMU Preintegration Using Switched Linear Systems For Autonomous Systems

Jul 19, 2019

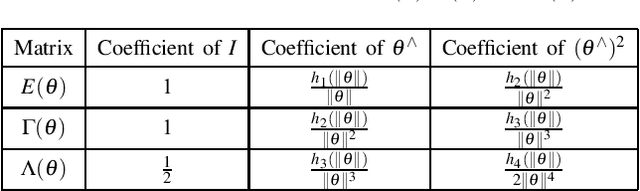

Abstract: Employing an inertial measurement unit (IMU) as an additional sensor can dramatically improve both the reliability and accuracy of visual/LiDAR odometry (VO/LO). Different IMU integration models are introduced using different assumptions on the linear acceleration from the IMU. In this paper, a novel IMU integration model is proposed by using switched linear systems. The proposed approach assumes that both the linear acceleration and the angular velocity in the body frame are constant between two consecutive IMU measurements. This is more realistic in real-world situations than existing approaches, which assume that the linear acceleration is constant in the world frame while the angular velocity is constant in the body frame between two successive IMU measurements. Experimental results show that the proposed approach outperforms the state-of-the-art IMU integration model. The proposed model is thus important for the localization of high-speed autonomous vehicles in GPS-denied environments.
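The difference between the two assumptions can be illustrated with a simplified planar sketch. This is not the paper's switched-linear-system formulation: it assumes 2D motion with a scalar yaw rate, ignores gravity and sensor biases, and uses hypothetical function names. It compares the velocity increment over one IMU interval when the acceleration is held constant in the world frame with the increment when the body-frame acceleration and yaw rate are both held constant.

```python
import math

def rotate(theta, v):
    """Rotate the 2D vector v by angle theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

def delta_v_world_constant(theta0, acc_body, dt):
    """Velocity increment if the world-frame acceleration is held constant over dt."""
    ax, ay = rotate(theta0, acc_body)
    return (ax * dt, ay * dt)

def delta_v_body_constant(theta0, omega, acc_body, dt):
    """Velocity increment if body-frame acceleration and yaw rate are constant over dt.

    Closed form of the integral of R(theta0 + omega * t) applied to acc_body
    for t in [0, dt], where R is the planar rotation matrix.
    """
    if abs(omega * dt) < 1e-9:
        # Small-angle limit of the two integrals below.
        s, c = dt, 0.5 * omega * dt * dt
    else:
        s = math.sin(omega * dt) / omega          # integral of cos(omega * t)
        c = (1.0 - math.cos(omega * dt)) / omega  # integral of sin(omega * t)
    integrated = (s * acc_body[0] - c * acc_body[1],
                  c * acc_body[0] + s * acc_body[1])
    return rotate(theta0, integrated)
```

For uniform circular motion, where the body-frame acceleration is a constant centripetal term, `delta_v_body_constant` reproduces the exact velocity change over the interval, while `delta_v_world_constant` accumulates an error that grows with the yaw rate and the interval length.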
